Roadmap to Global Certification in Red Hat

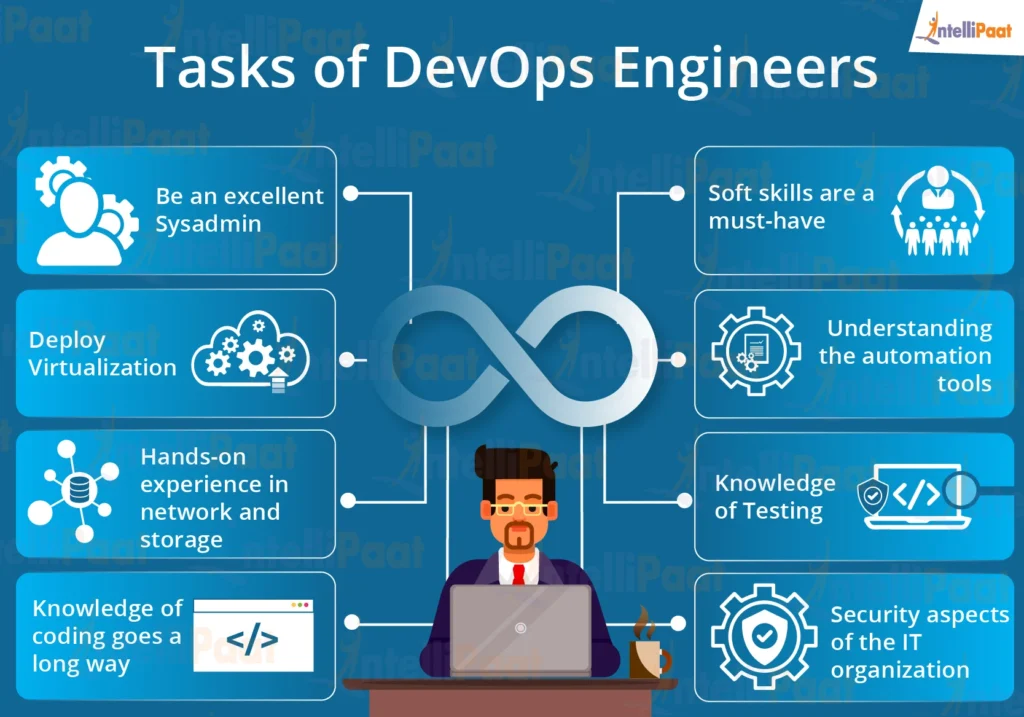

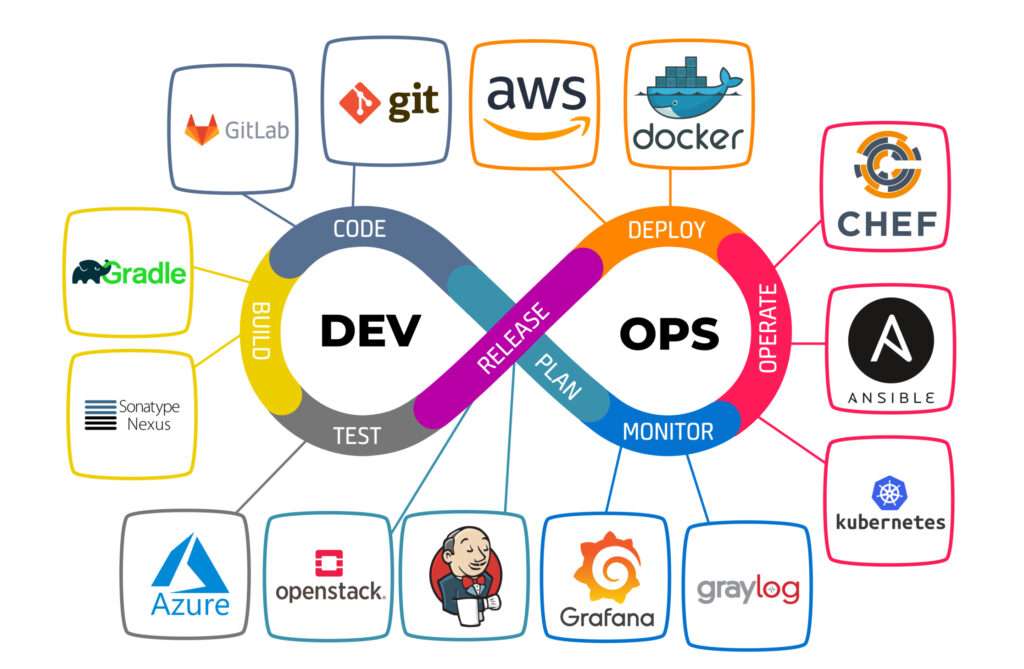

Your Guide to Becoming a Certified Red Hat Professional

In the fast-evolving world of Linux and open-source technologies, Red Hat certifications stand out as a gold standard for validating your expertise. Whether you’re an aspiring system administrator, cloud engineer, or DevOps professional, Red Hat provides a structured path to help you advance in your career. In this blog, we’ll explore the Red Hat certification roadmap, the skills you need, and how to prepare for success in the global certification landscape.

Why Red Hat Certification?

Red Hat is the world’s leading provider of enterprise open-source solutions. Organizations globally use Red Hat Enterprise Linux (RHEL), OpenShift, Ansible, and other Red Hat tools for stability, security, and scalability.

Red Hat Certification Track

1. Training

The foundational stage, where learners get access to structured content.

What’s Included:

- Dedicated lab setup to practice real-world tasks
- Ebook covering theoretical and practical knowledge
- Guided exercises for hands-on learning

2. Choose a Learning Mode

After the initial training setup, learners can choose either of two modes.

Instructor-Led Training

- Description: Live sessions conducted by Red Hat certified trainers
- Advantages:
  - Real-time interaction
  - Doubt clearing with experts
  - Structured class timing

Self-Paced Learning

- Description: Pre-recorded videos taught by Red Hat professionals
- What’s Included:
  - Access to video modules
  - Ebook and lab access, just like instructor-led training
  - Flexibility to learn at your own pace

3. Certification

Final Step: After completing training (via either method), you proceed to certification.
Includes:

- Exam coupon (valid for 1 year)
- One free attempt at the certification exam

Administrator New to Linux: RHCSA Certification Path

This track is for beginners with little or no experience in Linux. It outlines a structured journey from basic system administration skills to Red Hat certification.

1. Red Hat System Administration I (RH124)
   - Target Audience: Complete beginners
   - Focus: Introduction to Linux commands, shell usage, basic file management, and system navigation
   - Goal: Build foundational knowledge of Red Hat Enterprise Linux
2. Red Hat System Administration II (RH134)
   - Prerequisite: RH124
   - Focus: Intermediate skills like user management, security, process control, and service configuration
   - Goal: Prepare learners to manage RHEL systems in real-world scenarios
3. RHCSA Rapid Track Course (RH199) (Optional but Recommended)
   - Audience: Those seeking additional preparation before certification
   - Focus: Consolidates the key topics and reinforces hands-on skills, including:
     - Installing and configuring RHEL
     - Managing users, permissions, and software
     - Working with firewalls and storage
     - Writing shell scripts
   - Tip: RH199 is the RHCSA Rapid Track course, which suits those with some prior experience.
4. Red Hat Certified System Administrator (EX200): RHCSA Certification
   - Type: Performance-based exam, 3 hours long
   - Includes: Exam coupon valid for 1 year and one free attempt

Senior/Experienced Linux Administrator: RHCE Certification Path

This track is designed for professionals who already have some hands-on Linux experience and want to move into advanced system administration and automation roles.

1. RH199: RHCSA Rapid Track Course
   - Audience: Professionals who already know basic Linux
   - Purpose: Combines RH124 and RH134 into a fast-paced, intensive course
   - Goal: Prepares you for RHCSA quickly
2. EX200: RHCSA Certification (Mandatory)
   - Type: Performance-based exam
   - Topics: File systems, users, SELinux, storage, network services
   - Prerequisite: You must pass this exam before moving on to RHCE
3. RH294: Red Hat System Administration III (Ansible Automation)
   - Focus: Automating tasks using Red Hat Ansible Automation
   - Topics:
     - Managing large infrastructure with playbooks
     - Implementing advanced Linux networking and services
   - Goal: Manage complex environments through code (Infrastructure as Code)
4. EX294: RHCE Certification
   - Type: Practical hands-on exam
   - Focus: Automating Linux system administration using Ansible
   - Outcome: You become a Red Hat Certified Engineer (RHCE)
5. Digital Badge & Certification ID
   - Validity: 3 years
   - Recognition: Globally accepted digital credential showing your expertise in Linux automation

Choose a Specialization Path

After RHCE or equivalent experience, you can specialize in one or more of the following tracks, based on your career interests.

1. Automation & DevOps Specialization
   - Focus: Automating IT operations and infrastructure using Ansible
   - Ideal for: DevOps Engineers, Automation Architects
   - Key Certifications:
     - Red Hat Certified Specialist in Ansible Automation (EX407)
     - Red Hat Certified Specialist in Advanced Automation
2. Security Specialization
   - Focus: Hardening Linux systems, managing security policies, auditing, and SELinux
   - Ideal for: Security Engineers, Compliance Officers
   - Key Certification: Red Hat Certified Specialist in Security: Linux (EX415)
3. Cloud & Containers Specialization
   - Focus: OpenShift, Kubernetes, and cloud-native deployments
   - Ideal for: Cloud Engineers, Platform Engineers
   - Key Certifications:
     - Red Hat Certified Specialist in OpenShift Administration (EX280)
     - OpenShift Developer or Site Reliability Engineering (SRE) badges
4. Infrastructure & Virtualization Specialization
   - Focus: Advanced system design, troubleshooting, and virtualization
   - Ideal for: System Architects, Infrastructure Admins
   - Key Certification: Red Hat Certified Specialist in Virtualization (EX318)
5. Application Development Specialization
   - Focus: Developing, integrating, and deploying applications on Red Hat platforms
   - Ideal for: Java Developers, Integration Architects
   - Key Certifications:
     - Red Hat Certified Specialist in Application Development
     - Red Hat Certified Specialist in Integration

Path to RHCA: Red Hat Certified Architect

The Red Hat Certified Architect (RHCA) is the highest level of certification offered by Red Hat. It is designed for experienced IT professionals who want to master multiple Red Hat technologies and prove their ability to architect and manage complex enterprise systems.

To earn RHCA, you must complete RHCE plus 5 specialist certifications. There are two tracks:

- RHCA in Infrastructure
- RHCA in Enterprise Applications

This level demonstrates your mastery and makes you a go-to expert in your organization.

RHCA at a Glance

RHCA Certification Path

1. Start with a Prerequisite

You must first become either:

- RHCE (Red Hat Certified Engineer), or
- A Red Hat Certified Specialist in Application Development

2. Choose Your RHCA Track

Red Hat offers flexibility. You can build your RHCA in one of the following focus areas or combine several:

- RHCA in Infrastructure
  - Focus: Advanced Linux administration, clustering, Satellite server, virtualization
- RHCA in Cloud
  - Focus: OpenShift, Kubernetes, hybrid cloud management
- RHCA in DevOps
  - Focus: Automation
